(Via ScienceNode) Turbulence is present in most fluid flows, such as ocean currents, the air around aircraft wings, the blood in our arteries, and smoke from chimneys. Because turbulence is so prevalent, its study has many industrial and environmental applications. For instance, scientists model turbulence to improve vehicle design, diagnose atherosclerosis, build safer bridges, and reduce air pollution.

Caused by excess energy that cannot be absorbed by a fluid’s viscosity, turbulent flow is by nature irregular and therefore hard to predict. The velocity of the fluid at any point constantly fluctuates in both magnitude and direction, presenting researchers with a long-standing challenge. Because fluid parcels must be followed in time, in space, and across a wide range of scales, the governing equations generate far more information than computers can handle. Even now, only a small part of the flow fits in a computer simulation.
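Why resolving every scale overwhelms a computer can be made concrete with a back-of-the-envelope estimate. The sketch below is an illustration, not the UPM group's method: it assumes Kolmogorov's classical scaling, in which the ratio between the largest eddies (size L) and the smallest, dissipative ones (size η) grows as Re^(3/4), where Re is the Reynolds number. Resolving every eddy in three dimensions then needs roughly (L/η)^3 ~ Re^(9/4) grid points, ignoring order-one prefactors. The function names are hypothetical.

```python
"""Rough estimate of the grid size needed to resolve all scales of a turbulent flow.

Assumes Kolmogorov's scaling L/eta ~ Re**(3/4) between the largest eddies (L)
and the smallest, dissipative ones (eta). Order-one prefactors are ignored,
so the results are orders of magnitude only.
"""


def scale_ratio(reynolds: float) -> float:
    """Ratio of largest to smallest eddy size, L/eta ~ Re^(3/4)."""
    return reynolds ** 0.75


def grid_points_3d(reynolds: float) -> float:
    """Grid points needed to resolve every eddy in 3D: (L/eta)^3 ~ Re^(9/4)."""
    return scale_ratio(reynolds) ** 3


if __name__ == "__main__":
    for re in (1e3, 1e4, 1e6, 1e8):
        print(f"Re = {re:.0e}: L/eta ~ {scale_ratio(re):.1e}, "
              f"grid points ~ {grid_points_3d(re):.1e}")
```

At Reynolds numbers of order 10^7 and above, typical of airliner wings, the estimate exceeds 10^15 grid points, which is why simulations resolve only part of the flow and rely on models for the rest.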

Scientists use models to make up the missing part. However, if those models are imprecise, the simulation is wrong and no longer represents the flow it is meant to reproduce. Recent research published in Science by the Fluid Dynamics Group at Universidad Politécnica de Madrid (UPM) aims to gain new insights into the physics behind turbulent flows and to narrow the gap between simulated flows and the flows around real devices. “A main source of discrepancy between computer-modeled flows and the flow around a real airplane is given by the poor performance of the models,” says José I. Cardesa, the first author of the work. The Fluid Dynamics Group at UPM is led by Javier Jiménez and has used the supercomputing resources of the Spanish Supercomputing Network (RES) to study fluid dynamics since 2006.

An underlying simplicity

In the 1940s, mathematician Andrey Kolmogorov proposed that turbulence occurs in a cascade. A turbulent flow contains whirls, or eddies, of many different sizes. According to Kolmogorov, energy is transferred from the large whirls to smaller and more numerous ones, rather than spreading to more distant regions of the flow. But, Cardesa says, the chaotic behavior of a fluid makes it hard to observe any trend with the naked eye.
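Kolmogorov's 1941 theory puts the cascade picture into equations. Assuming the energy flux ε (per unit mass) is the same at every scale of the cascade, an eddy of size ℓ with velocity u_ℓ passes energy on at a rate ε ~ u_ℓ³/ℓ, which fixes its velocity and its turnover time τ_ℓ; smaller eddies therefore evolve faster. The same argument gives the −5/3 energy spectrum E(k) over wavenumber k, with C_K the empirical Kolmogorov constant (roughly 1.5), and ends the cascade at the Kolmogorov length η, where the kinematic viscosity ν dissipates the energy. With U and L the large-scale velocity and size, ε ~ U³/L and Re = UL/ν, so η/L ~ Re^(−3/4). These are the textbook scaling relations, shown for orientation; they are not the analysis used in the Science paper.

$$
\varepsilon \sim \frac{u_\ell^{3}}{\ell}
\quad\Longrightarrow\quad
u_\ell \sim (\varepsilon\,\ell)^{1/3},
\qquad
\tau_\ell \sim \frac{\ell}{u_\ell} \sim \varepsilon^{-1/3}\,\ell^{2/3}
$$

$$
E(k) = C_K\,\varepsilon^{2/3}\,k^{-5/3},
\qquad
\eta = \left(\frac{\nu^{3}}{\varepsilon}\right)^{1/4},
\qquad
\frac{\eta}{L} \sim \left(\frac{\nu}{U L}\right)^{3/4} = \mathrm{Re}^{-3/4}
$$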

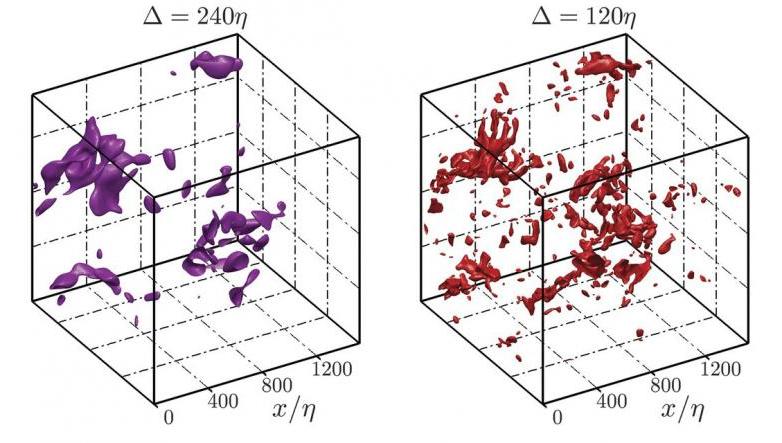

Hoping to track individual eddy structures and determine whether a recurrent behavior governs how turbulence spreads, researchers from the Fluid Dynamics Group at UPM simulated turbulent flow on the MinoTauro cluster at the Barcelona Supercomputing Center.

The simulation ran in parallel on 32 NVIDIA Tesla M2090 cards, using a hybrid CUDA-MPI code developed by Alberto Vela-Martín. It took almost three months to complete and produced over one hundred terabytes of compressed data.

Analysis of the stored simulation data was initially slow, until the researchers adapted the code to fit on a single node of a computer cluster with 48 GB of RAM per node. This let them run the process independently on twelve different nodes and complete the task within just one month.
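The article does not describe how the analysis was divided among the twelve nodes. As a purely illustrative sketch, assume the work can be split into independent items that each fit in one node's memory (hypothetical; the item count and function names below are invented). Splitting the items into contiguous, near-equal blocks lets each node work without communicating with the others.

```python
"""Split an analysis into independent per-node blocks of work.

Hypothetical sketch: the number of work items and the function names are
illustrative, not taken from the UPM code. Only the node count (12) comes
from the article.
"""


def partition(n_items: int, n_workers: int) -> list[range]:
    """Split indices 0..n_items-1 into n_workers contiguous, near-equal ranges."""
    base, extra = divmod(n_items, n_workers)
    ranges = []
    start = 0
    for w in range(n_workers):
        size = base + (1 if w < extra else 0)  # the first `extra` workers take one more item
        ranges.append(range(start, start + size))
        start += size
    return ranges


def analyse_item(index: int) -> int:
    """Hypothetical placeholder for work that must fit in one node's RAM."""
    return index  # a real job would load and process one piece of the data


if __name__ == "__main__":
    N_ITEMS = 1000  # hypothetical number of independent work items
    N_NODES = 12    # the twelve nodes mentioned in the article
    for node, block in enumerate(partition(N_ITEMS, N_NODES)):
        # In a real run each node would execute only its own block;
        # here all blocks run one after another for illustration.
        results = [analyse_item(i) for i in block]
        print(f"node {node:2d}: items {block.start}-{block.stop - 1} ({len(results)} items)")
```

Because no data is exchanged between blocks, the wall-clock time falls roughly in proportion to the number of nodes, provided the items take similar amounts of time.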

Their results validated Kolmogorov’s theory, revealing an underlying simplicity in the apparently random motion of turbulent wind or water. The next step may be to understand the cause of the trend detected, or to incorporate the new insights into flow simulation software.

The present work has benefited from advances in computational speed and storage capacity. Cardesa points out that his work could have been done about ten years ago, but at such expense that it would have required a ‘heroic’ computational effort. “The reduced cost of technology has made it possible for us to play with these datasets. This is an extremely useful situation to be in when doing fundamental research and throwing all our efforts at an unsolved problem.”

Reference

Cardesa, J.I., Vela-Martín, A. and Jiménez, J., 2017. The turbulent cascade in five dimensions. Science, p.eaan7933. DOI: 10.1126/science.aan7933